Sequencing: A perfect storm

How drops in sequencing prices have revolutionised biology

The cost of DNA sequencing has fallen precipitously over the last 20 years: the price of sequencing a human genome has dropped almost a millionfold since the first one was published in 2003. DNA has inherent utility as a storage medium, so this fall in price has opened up previously unimagined areas for innovation.

Sequencing costs largely owe their astonishing drop to “Next-Generation Sequencing” technology, which was ultimately acquired by Illumina. Ex-team members moved on to found Oxford Nanopore, which produces a miniaturised sequencer about the size of a mobile phone that can be plugged into a computer’s USB port. Aside from their obvious potential for field use, these devices produce extremely long “read lengths” to cover the genome, which is particularly useful for studying plants, which have some of the largest genomes.

The drop in sequencing costs has led to a rise in the number of companies, such as 23andMe, offering to either sequence or genotype individuals and to provide information on everything from their genealogy to their predisposition to genetic disorders. These analyses always come with caveats, since the data cannot quantify the complex interaction between genes, the environment and other extrinsic factors. Some fear that more sinister uses may emerge, including the potential for insurers, employers or even governments to use this information in decision-making.

Low-cost sequencing makes swathes of previously inaccessible genomic data available to researchers, but the utility of DNA as a programming material has only emerged with a corresponding fall in the cost of synthesising the molecule. Gene synthesis is now a commercial service, available for as little as a few cents per base pair. DNA storage is a particularly fascinating application, in which information is encoded through synthesis, then decoded through sequencing. DNA’s four-base system (G, C, A, T) means each base can carry two bits of information, and because the bases are packed at molecular scale, far more information can be stored in a given volume than with conventional binary data storage systems. A number of companies and researchers are exploring this possibility: earlier this year, Harvard scientists used a gene editing tool known as CRISPR to encode a movie into a DNA molecule, insert it into living bacteria, then sequence the DNA to view the movie.
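To make the idea of encoding and decoding concrete, here is a minimal sketch in Python of how binary data could be written as a string of bases and read back. The mapping of bit pairs to bases and the function names `encode` and `decode` are assumptions chosen purely for illustration; this is not the scheme used in the Harvard work, and real DNA storage systems add error correction and avoid sequences that are hard to synthesise or sequence.

```python
# Hypothetical mapping: each base stands for two bits (an illustrative choice).
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode(data: bytes) -> str:
    """Turn bytes into a strand of bases, four bases per byte ("synthesis")."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Turn a strand of bases back into the original bytes ("sequencing")."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


message = b"DNA"
strand = encode(message)
print(strand)                     # CACACATGCAAC
assert decode(strand) == message  # the round trip recovers the message
```

In this toy version, three bytes of text become twelve bases, so every base holds exactly two bits.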

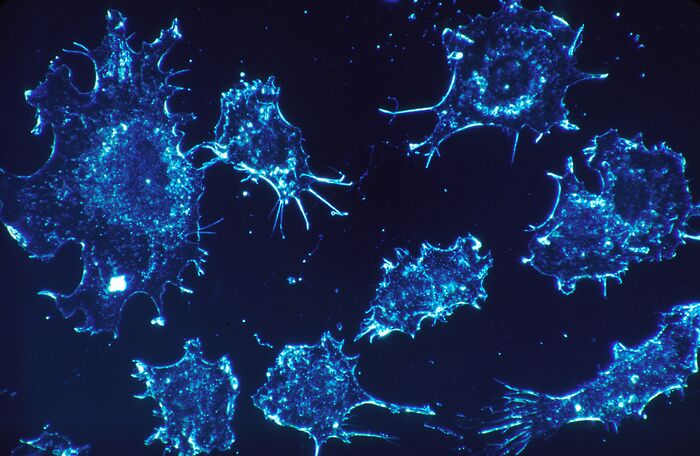

Despite the cost reductions, only a handful of finished genomes exist today. De novo DNA synthesis is currently an expensive and challenging art. While collecting data is feasible and affordable, assembling such vast quantities of data is difficult. Many “short reads” cannot be reconstructed faithfully into whole genomes because of long repetitive sequences. We are currently witnessing a perfect storm in sequencing, in which data analysis, processing power and sequencing itself have become powerful and affordable enough to allow high-throughput, meaningful analyses of disease, evolution and more across all of life.
